
NEW: Check out the new blog post by the tutorial chairs describing the detailed modalities of the ACL 2020 tutorials.

The following tutorials will be held on Sunday, July 5th, 2020. Check out this blog post by the tutorial chairs to learn more about the new virtual format of the tutorials.

Tutorial Schedule

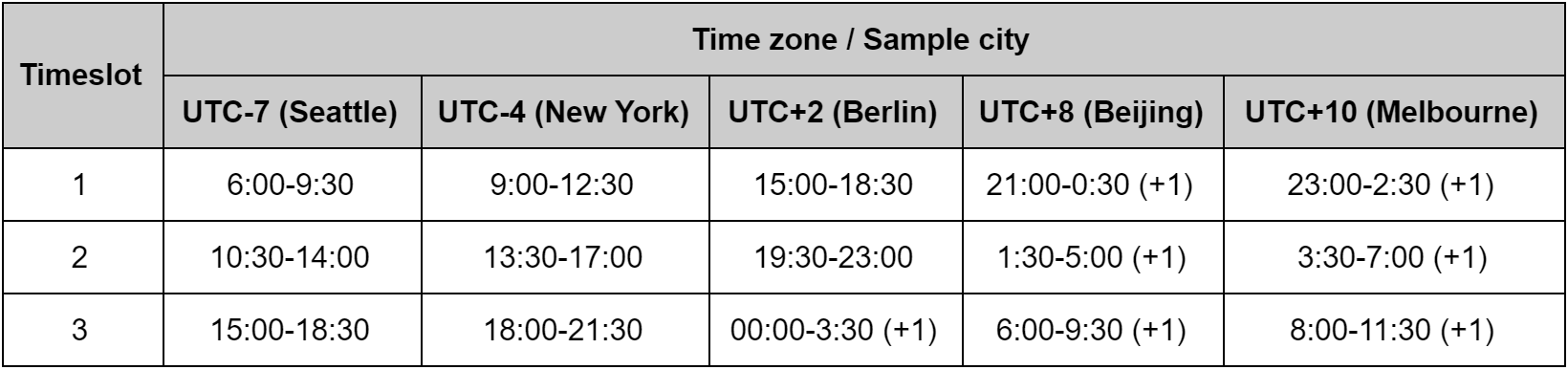

Note that all time slots are given in Seattle time, Pacific Daylight Time (UTC-7). Some tutorials are held twice and some only once.

6:00 AM to 9:30 AM (Seattle Time)

T1: Interpretability and Analysis in Neural NLP

T5: Achieving Common Ground in Multi-modal Dialogue

T7: Integrating Ethics into the NLP Curriculum

10:30 AM to 2:00 PM (Seattle Time)

T1: Interpretability and Analysis in Neural NLP

T3: Reviewing Natural Language Processing Research

T4: Stylized Text Generation: Approaches and Applications

T7: Integrating Ethics into the NLP Curriculum

3:00 PM to 6:30 PM (Seattle Time)

T2: Multi-modal Information Extraction from Text, Semi-structured, and Tabular Data on the Web

T5: Achieving Common Ground in Multi-modal Dialogue

T6: Commonsense Reasoning for Natural Language Processing

T8: Open-Domain Question Answering
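For attendees outside the Pacific time zone, the three slots above can be converted to UTC with a few lines of Python. This is only a minimal sketch, assuming the fixed UTC-7 offset stated in the note and the date of Sunday, July 5th, 2020; the names `SEATTLE`, `SLOTS`, `to_utc` and `slots_in_utc` are illustrative and not part of any conference tooling.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC-7 offset, as stated in the schedule note (assumption: no DST change on that day).
SEATTLE = timezone(timedelta(hours=-7), "PDT")

# The three tutorial slots (start, end) in Seattle wall-clock time.
SLOTS = [
    ("6:00 AM", "9:30 AM"),
    ("10:30 AM", "2:00 PM"),
    ("3:00 PM", "6:30 PM"),
]

FMT = "%Y-%m-%d %H:%M"


def to_utc(clock: str) -> datetime:
    """Parse a Seattle wall-clock time on July 5, 2020 and return it in UTC."""
    local = datetime.strptime(f"2020-07-05 {clock}", "%Y-%m-%d %I:%M %p")
    return local.replace(tzinfo=SEATTLE).astimezone(timezone.utc)


def slots_in_utc():
    """Return each slot as a (start, end) pair of UTC timestamps formatted with FMT."""
    return [(to_utc(s).strftime(FMT), to_utc(e).strftime(FMT)) for s, e in SLOTS]


if __name__ == "__main__":
    for start, end in slots_in_utc():
        print(f"{start} to {end} UTC")
```

Note that the last slot ends after midnight UTC, so its end time falls on July 6th in UTC and in time zones east of it.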

Tutorial Details

T1: Interpretability and Analysis in Neural NLP (Cutting-edge)

Organizers: Yonatan Belinkov, Sebastian Gehrmann and Ellie Pavlick

While deep learning has transformed the natural language processing (NLP) field and impacted the larger computational linguistics community, the rise of neural networks is stained by their opaque nature: It is challenging to interpret the inner workings of neural network models, and explicate their behavior. Therefore, in the last few years, an increasingly large body of work has been devoted to the analysis and interpretation of neural network models in NLP. This body of work is so far lacking a common framework and methodology. Moreover, approaching the analysis of modern neural networks can be difficult for newcomers to the field. This tutorial aims to fill this gap and introduce the nascent field of interpretability and analysis of neural networks in NLP. The tutorial will cover the main lines of analysis work, such as structural analyses using probing classifiers, behavioral studies and test suites, and interactive visualizations. We will highlight not only the most commonly applied analysis methods, but also the specific limitations and shortcomings of current approaches, in order to inform participants where to focus future efforts.

T2: Multi-modal Information Extraction from Text, Semi-structured, and Tabular Data on the Web (Cutting-edge)

Organizers: Xin Luna Dong, Hannaneh Hajishirzi, Colin Lockard and Prashant Shiralkar

The World Wide Web contains vast quantities of textual information in several forms: unstructured text, template-based semi-structured webpages (which present data in key-value pairs and lists), and tables. Methods for extracting information from these sources and converting it to a structured form have been a target of research from the natural language processing (NLP), data mining, and database communities. While these researchers have largely separated extraction from web data into different problems based on the modality of the data, they have faced similar problems such as learning with limited labeled data, defining (or avoiding defining) ontologies, making use of prior knowledge, and scaling solutions to deal with the size of the Web. In this tutorial we take a holistic view toward information extraction, exploring the commonalities in the challenges and solutions developed to address these different forms of text. We will explore the approaches targeted at unstructured text that largely rely on learning syntactic or semantic textual patterns, approaches targeted at semi-structured documents that learn to identify structural patterns in the template, and approaches targeting web tables which rely heavily on entity linking and type information. While these different data modalities have largely been considered separately in the past, recent research has started taking a more inclusive approach toward textual extraction, in which the multiple signals offered by textual, layout, and visual clues are combined into a single extraction model made possible by new deep learning approaches. At the same time, trends within purely textual extraction have shifted toward full-document understanding rather than considering sentences as independent units. With this in mind, it is worth considering the information extraction problem as a whole to motivate solutions that harness textual semantics along with visual and semi-structured layout information. 
We will discuss these approaches and suggest avenues for future work.

See https://sites.google.com/view/acl-2020-multi-modal-ie for the tutorial material.

T3: Reviewing Natural Language Processing Research (Introductory)

Organizers: Kevin Cohen, Karën Fort, Margot Mieskes and Aurélie Névéol

This tutorial will cover the theory and practice of reviewing research in natural language processing. Heavy reviewing burdens on natural language processing researchers have made it clear that our community needs to increase the size of our pool of potential reviewers. Simultaneously, notable false negatives—rejection by our conferences of work that was later shown to be tremendously important after acceptance by other conferences—have raised awareness of the fact that our reviewing practices leave something to be desired. We do not often talk about false positives with respect to conference papers, but leaders in the field have noted that we seem to have a publication bias towards papers that report high performance, with perhaps not much else of interest in them. It need not be this way. Reviewing is a learnable skill, and you will learn it here via lectures and a considerable amount of hands-on practice.

T4: Stylized Text Generation: Approaches and Applications (Cutting-edge)

Organizers: Lili Mou and Olga Vechtomova

Text generation has played an important role in various applications of natural language processing (NLP), and in recent studies, researchers are paying increasing attention to modeling and manipulating the style of the generated text, which we call stylized text generation. In this tutorial, we will provide a comprehensive literature review in this direction. We start from the definition of style and different settings of stylized text generation, illustrated with various applications. Then, we present different settings of stylized generation, such as style-conditioned generation, style-transfer generation, and style-adversarial generation. In each setting, we delve deep into machine learning methods, including embedding learning techniques to represent style, adversarial learning, and reinforcement learning with cycle consistency to match content but to distinguish different styles. We also introduce current approaches to evaluating stylized text generation systems. We conclude our tutorial by presenting the challenges of stylized text generation and discussing future directions, such as small-data training, non-categorical style modeling, and a generalized scope of style transfer (e.g., controlling the syntax as a style).

See https://sites.google.com/view/2020-stylized-text-generation/tutorial for the tutorial material.

T5: Achieving Common Ground in Multi-modal Dialogue (Cutting-edge)

Organizers: Malihe Alikhani and Matthew Stone

All communication aims at achieving common ground (grounding): interlocutors can work together effectively only with mutual beliefs about what the state of the world is, about what their goals are, and about how they plan to make their goals a reality. Computational dialogue research offers some classic results on grounding, which unfortunately offer scant guidance to the design of grounding modules and behaviors in cutting-edge systems. In this tutorial, we focus on three main topic areas: 1) grounding in human-human communication; 2) grounding in dialogue systems; and 3) grounding in multi-modal interactive systems, including image-oriented conversations and human-robot interactions. We highlight a number of achievements of recent computational research in coordinating complex content, show how these results lead to rich and challenging opportunities for doing grounding in more flexible and powerful ways, and canvass relevant insights from the literature on human–human conversation. We expect that the tutorial will be of interest to researchers in dialogue systems, computational semantics and cognitive modeling, and hope that it will catalyze research and system building that more directly explores the creative, strategic ways conversational agents might be able to seek and offer evidence about their understanding of their interlocutors.

See https://github.com/malihealikhani/Grounding_in_Dialogue for the tutorial material.

T6: Commonsense Reasoning for Natural Language Processing (Introductory)

Organizers: Maarten Sap, Vered Shwartz, Antoine Bosselut, Yejin Choi and Dan Roth

Commonsense knowledge, such as knowing that “bumping into people annoys them” or “rain makes the road slippery”, helps humans navigate everyday situations seamlessly. Yet, endowing machines with such human-like commonsense reasoning capabilities has remained an elusive goal of artificial intelligence research for decades. In recent years, commonsense knowledge and reasoning have received renewed attention from the natural language processing (NLP) community, yielding exploratory studies in automated commonsense understanding. We organize this tutorial to provide researchers with the critical foundations and recent advances in commonsense representation and reasoning, in the hopes of casting a brighter light on this promising area of future research. In our tutorial, we will (1) outline the various types of commonsense (e.g., physical, social), and (2) discuss techniques to gather and represent commonsense knowledge, while highlighting the challenges specific to this type of knowledge (e.g., reporting bias). We will then (3) discuss the types of commonsense knowledge captured by modern NLP systems (e.g., large pretrained language models), and (4) present ways to measure systems’ commonsense reasoning abilities. We will finish with (5) a discussion of various ways in which commonsense reasoning can be used to improve performance on NLP tasks, exemplified by (6) an interactive session on integrating commonsense into a downstream task.

See https://tinyurl.com/acl2020-commonsense for the tutorial material.

T7: Integrating Ethics into the NLP Curriculum (Introductory)

Organizers: Emily M. Bender, Dirk Hovy and Alexandra Schofield

To raise awareness among future NLP practitioners and prevent inertia in the field, we need to place ethics in the curriculum for all NLP students—not as an elective, but as a core part of their education. Our goal in this tutorial is to empower NLP researchers and practitioners with tools and resources to teach others about how to ethically apply NLP techniques. We will present both high-level strategies for developing an ethics-oriented curriculum, based on experience and best practices, as well as specific sample exercises that can be brought to a classroom. This highly interactive work session will culminate in a shared online resource page that pools lesson plans, assignments, exercise ideas, reading suggestions, and ideas from the attendees. Though the tutorial will focus particularly on examples for university classrooms, we believe these ideas can extend to company-internal workshops or tutorials in a variety of organizations. In this setting, a key lesson is that there is no single approach to ethical NLP: each project requires thoughtful consideration about what steps can be taken to best support people affected by that project. However, we can learn (and teach) what issues to be aware of, what questions to ask, and what strategies are available to mitigate harm.

T8: Open-Domain Question Answering (Cutting-edge)

Organizers: Danqi Chen and Scott Wen-tau Yih

This tutorial provides a comprehensive and coherent overview of cutting-edge research in open-domain question answering (QA), the task of answering questions using a large collection of documents of diversified topics. We will start by first giving a brief historical background, discussing the basic setup and core technical challenges of the research problem, and then describe modern datasets with the common evaluation metrics and benchmarks. The focus will then shift to cutting-edge models proposed for open-domain QA, including two-stage retriever-reader approaches, dense retriever and end-to-end training, and retriever-free methods. Finally, we will cover some hybrid approaches using both text and large knowledge bases and conclude the tutorial with important open questions. We hope that the tutorial will not only help the audience to acquire up-to-date knowledge but also provide new perspectives to stimulate the advances of open-domain QA research in the next phase.

See https://github.com/danqi/acl2020-openqa-tutorial for the tutorial material.
